Visual SLAM: Mapping & Localization of Mobile Robots

Utilizing monocular cameras and ROS for robust mapping and precise localization.

Project Information

- Keywords: Robot Perception, Machine Vision, Active Perception, ROS2, Sensor Interfacing

- Supervisor & Mentor: Asst. Prof. Manoj Kumar Guragai

- Team: Ranish Devkota, Gokarna Baskota, Sadanand Paneru, Shuruchi Yadav

Facility used: Robotics club

Project Overview

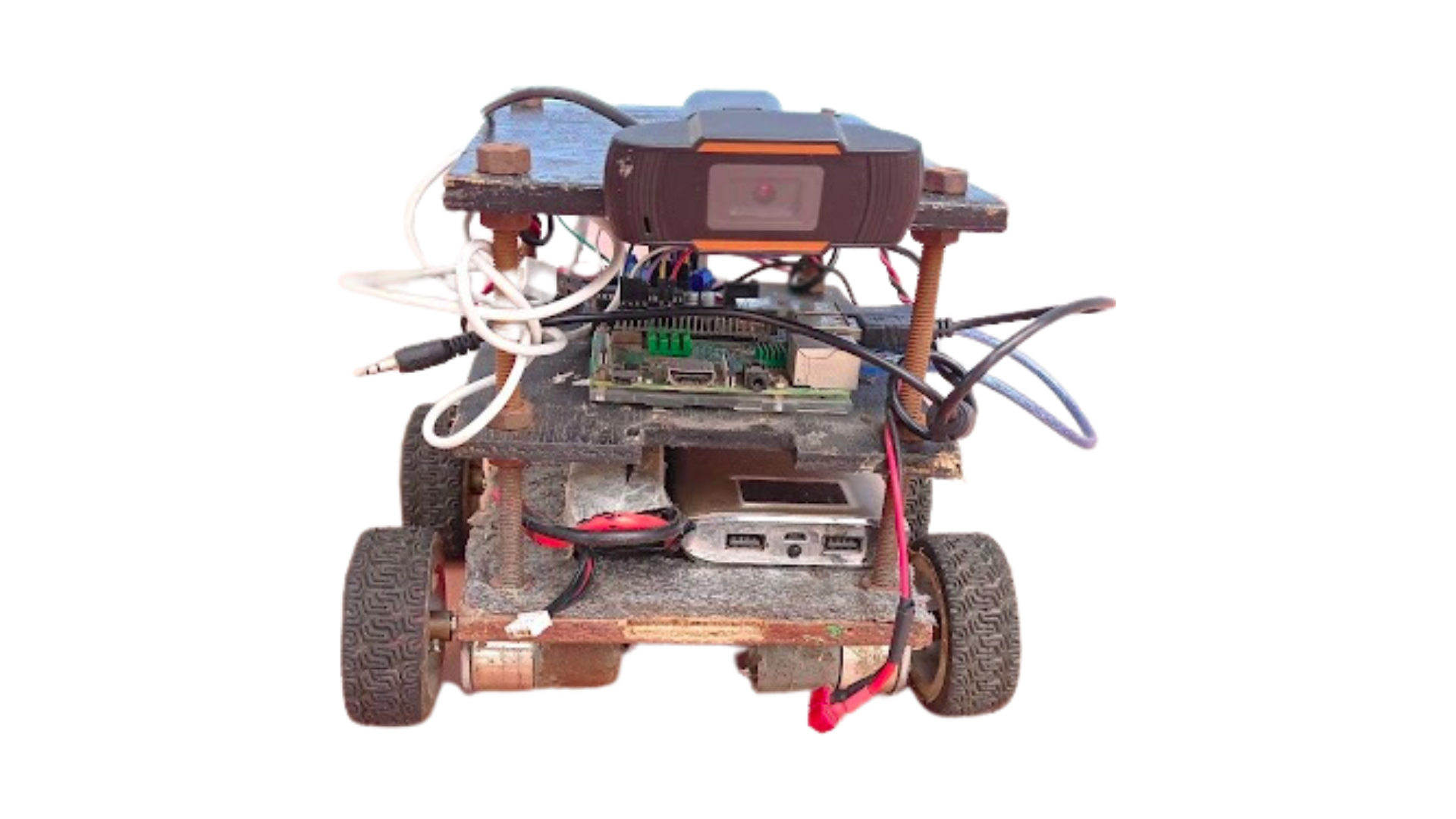

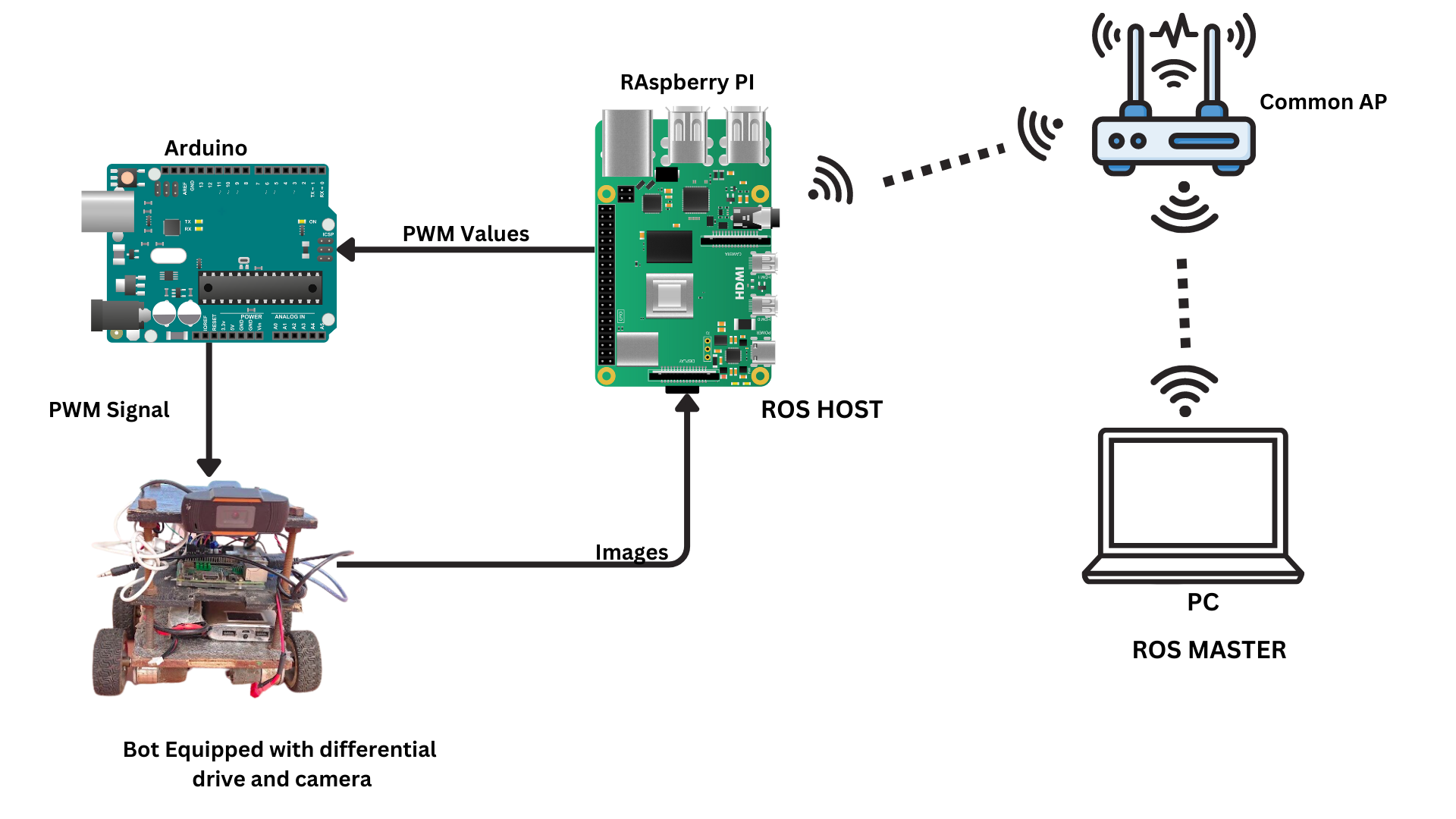

This project implements a visual SLAM (V-SLAM) pipeline with a monocular USB camera. It relies on keyframe extraction and on the differences in features between adjacent frames, refined with bundle adjustment, using the Stella VSLAM algorithm and ROS2. Computation is offloaded by splitting the system into a robot-end node and a computer-end node, while a Raspberry Pi and an Arduino handle robot control and the ROS2 implementation.
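To make the idea of keyframe extraction concrete, here is a minimal sketch of one common heuristic: promote a frame to a keyframe once too few of the last keyframe's features are still visible. This is an illustrative assumption, not the rule Stella VSLAM actually applies; the function name `select_keyframes`, the `min_overlap` threshold, and the feature IDs are all hypothetical.

```python
def select_keyframes(frames, min_overlap=0.6):
    """Return indices of frames chosen as keyframes.

    frames: list of sets, each holding the IDs of features tracked in a frame.
    A frame becomes a keyframe when the share of the last keyframe's features
    still visible in it falls below min_overlap (hypothetical threshold).
    """
    if not frames:
        return []
    keyframes = [0]  # the first frame always starts the map
    for idx in range(1, len(frames)):
        reference = frames[keyframes[-1]]
        if not reference:
            # Nothing to compare against, so start over from this frame.
            keyframes.append(idx)
            continue
        overlap = len(reference & frames[idx]) / len(reference)
        if overlap < min_overlap:
            keyframes.append(idx)
    return keyframes


# Hypothetical feature tracks for five consecutive frames.
frames = [
    {1, 2, 3, 4, 5},
    {1, 2, 3, 4, 6},
    {1, 2, 6, 7, 8},
    {2, 6, 7, 8, 9},
    {7, 8, 9, 10, 11},
]
print(select_keyframes(frames))
```

Lowering `min_overlap` yields fewer keyframes and a lighter map, at the cost of less shared structure between consecutive keyframes for bundle adjustment to work with.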

Explanation

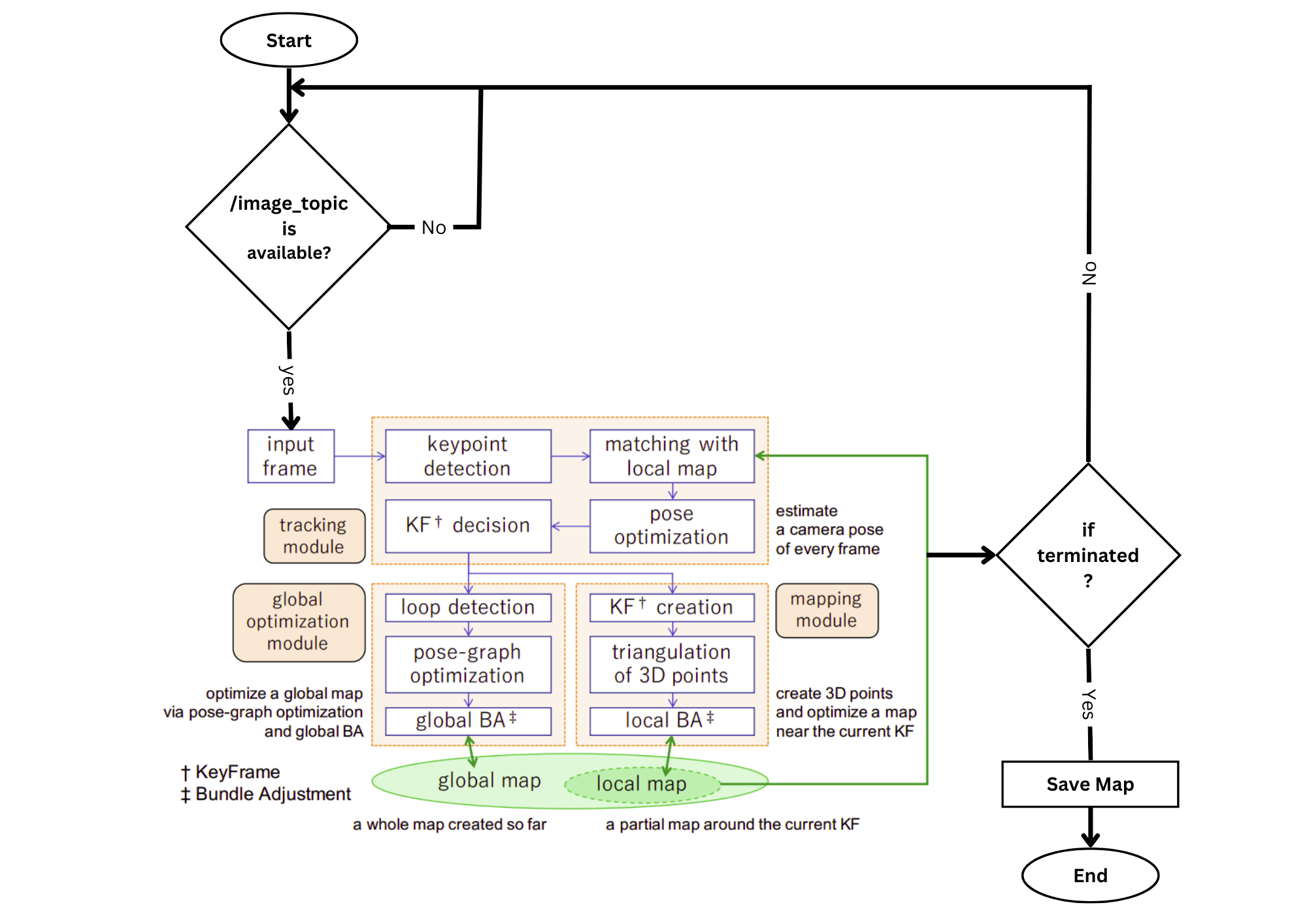

V-SLAM (Visual Simultaneous Localization and Mapping) is a technique that allows a robot to build a map of its environment while simultaneously localizing itself within that map. This project used a monocular camera to capture visual data, which was processed in real time using the Stella VSLAM algorithm integrated with ROS2. The algorithm extracts features from keyframes, tracks how those features change between adjacent images, and applies bundle adjustment to build the map from a single monocular camera.
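Bundle adjustment refines camera poses and map points by minimizing reprojection error, the pixel distance between where a 3D point is predicted to appear and where its feature was actually observed. The sketch below computes that residual for a single point under a simple pinhole model. It assumes the point is already expressed in the camera frame (a full bundle adjustment would first transform it by the camera pose), and the intrinsics `fx`, `fy`, `cx`, `cy` and the sample values are hypothetical, not taken from this project's camera.

```python
import math


def project(point_cam, fx, fy, cx, cy):
    """Project a 3D point in the camera frame to pixel coordinates (pinhole model)."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return (fx * x / z + cx, fy * y / z + cy)


def reprojection_error(point_cam, observed_px, fx, fy, cx, cy):
    """Pixel distance between the predicted and the observed feature location."""
    u, v = project(point_cam, fx, fy, cx, cy)
    return math.hypot(u - observed_px[0], v - observed_px[1])


def total_cost(points_cam, observations, fx, fy, cx, cy):
    """Sum of squared reprojection errors, the quantity bundle adjustment minimizes."""
    return sum(
        reprojection_error(p, o, fx, fy, cx, cy) ** 2
        for p, o in zip(points_cam, observations)
    )


# Hypothetical intrinsics and a single point/observation pair.
fx, fy, cx, cy = 500.0, 500.0, 320.0, 240.0
point = (0.2, -0.1, 2.0)   # metres, camera frame
observed = (373.0, 211.0)  # pixels
print(reprojection_error(point, observed, fx, fy, cx, cy))
```

With these values the point projects to roughly pixel (370, 215), so the residual is about 5 pixels. An optimizer would adjust the pose and point estimates to shrink the summed squared residuals over all keyframes.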

Methodology

The project followed these key steps:

Results

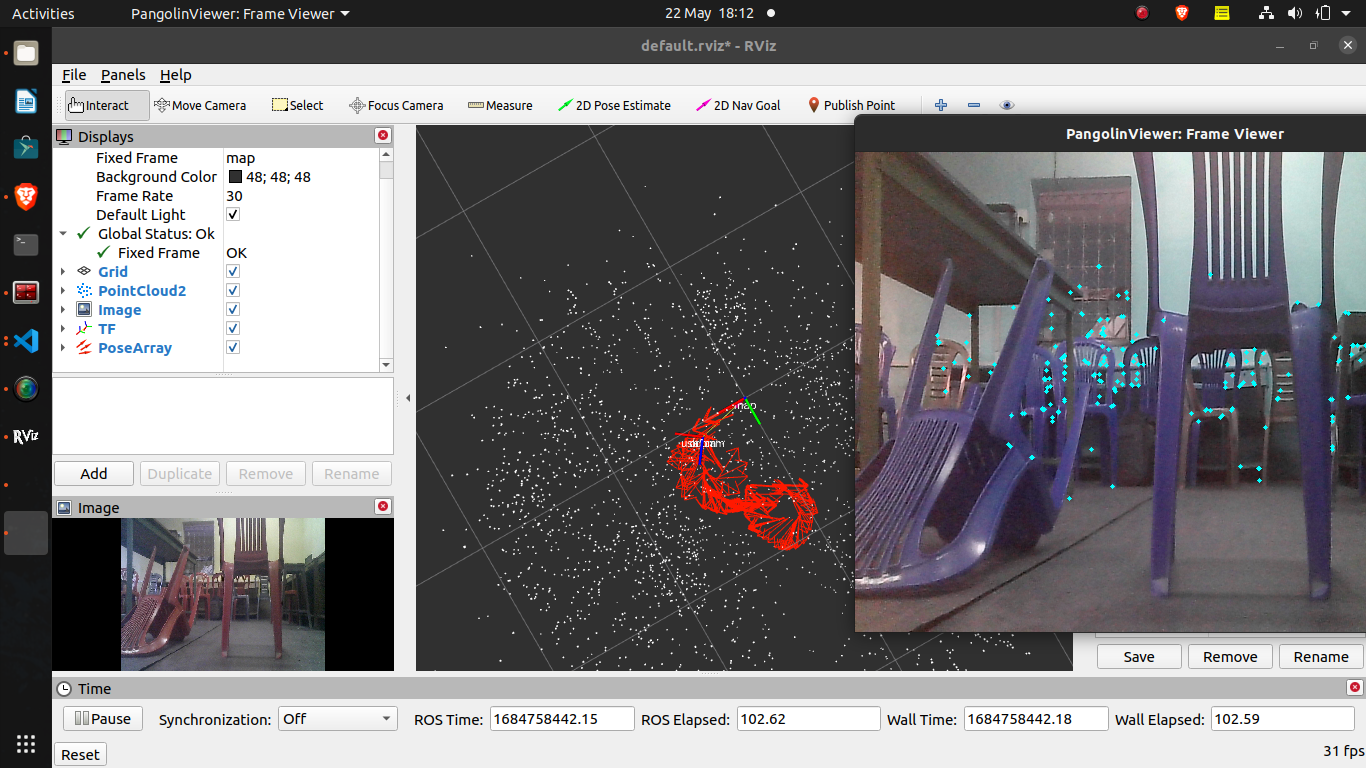

The robot successfully mapped indoor environments with significant accuracy. It could localize itself within a previously mapped environment and perform SLAM tasks with a single camera. The system showed minimal drift and robust performance under dynamic conditions, such as changing lighting and obstacles.
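One way drift like this could be quantified is the root-mean-square absolute trajectory error (ATE) between the estimated path and a reference path. The sketch below is a minimal version under stated assumptions: both trajectories are already time-synchronized, aligned, and scaled to the same frame (monocular SLAM does not recover metric scale on its own), and the sample coordinates are hypothetical, not measurements from this project.

```python
import math


def ate_rmse(estimated, reference):
    """Root-mean-square position error between two equally sampled trajectories."""
    if not estimated or len(estimated) != len(reference):
        raise ValueError("trajectories must be non-empty and of equal length")
    squared_errors = [
        sum((e - r) ** 2 for e, r in zip(est_pos, ref_pos))
        for est_pos, ref_pos in zip(estimated, reference)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical 2D positions in metres: the estimate slowly drifts sideways.
reference = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
estimated = [(0.0, 0.0), (1.0, 0.1), (2.0, 0.2)]
print(ate_rmse(estimated, reference))
```

A value that stays small as the trajectory grows longer indicates low accumulated drift; a value that climbs steadily suggests the map would benefit from loop closure or tighter bundle adjustment.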

Key Findings

Conclusion

This V-SLAM implementation proved effective for autonomous mobile robots, offering a cost-efficient alternative to traditional LiDAR-based systems. Future improvements include